Fairness in Machine Learning: An Alternative Approach

The recent explosion of large language models such as ChatGPT has raised new questions about bias in their output. But the problem is not new: in recent years, society has become increasingly dependent on algorithmic systems and the output they generate. Such systems can offer efficient, data-driven decisions, particularly for binary questions (that is, questions with one of two possible answers) that are highly complex or cognitively demanding. Yet while digitizing decision-making may seem to promise a more objective process than human judgment, it has played out quite differently in practice. From facial recognition software to employment and interview pre-screening, algorithms have been found to reinforce systemic biases, exacerbating existing asymmetries and inequities rather than reducing them. Computer scientists have approached this problem by designing a variety of “fairness principles” that can be built into decision-making models; however, these principles have not yet been applied consistently, and the principles put forward are often mutually contradictory.

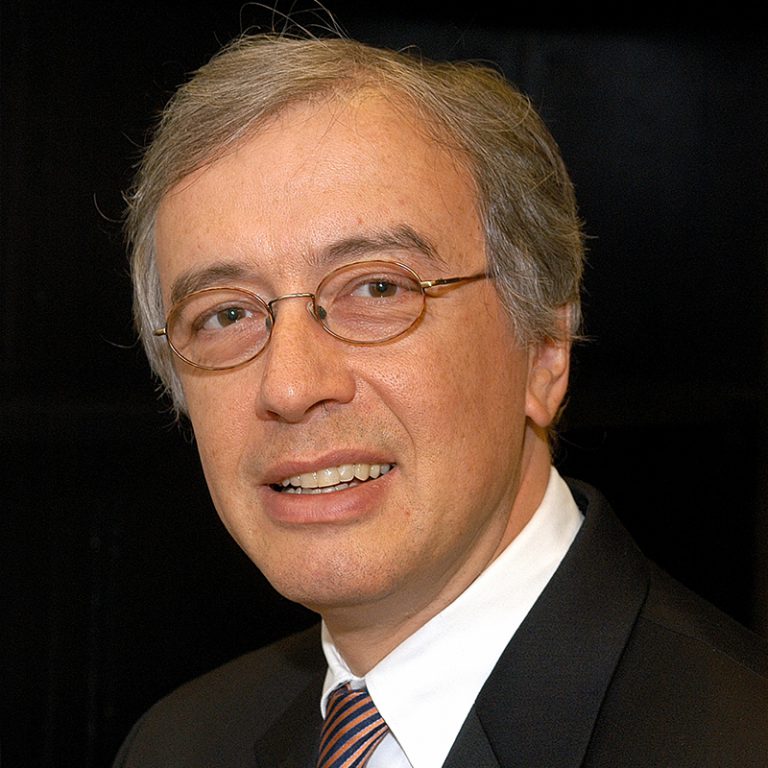

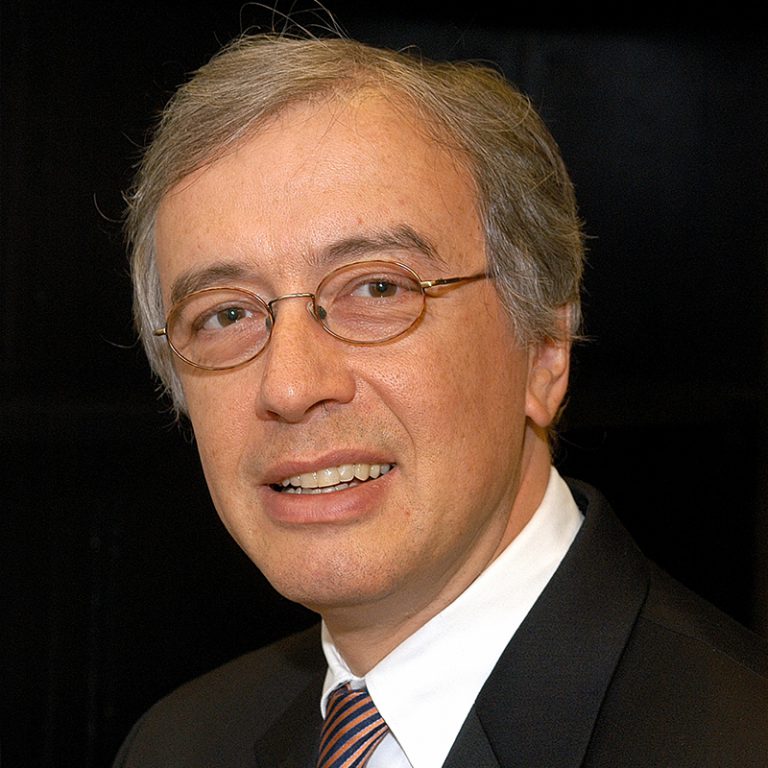

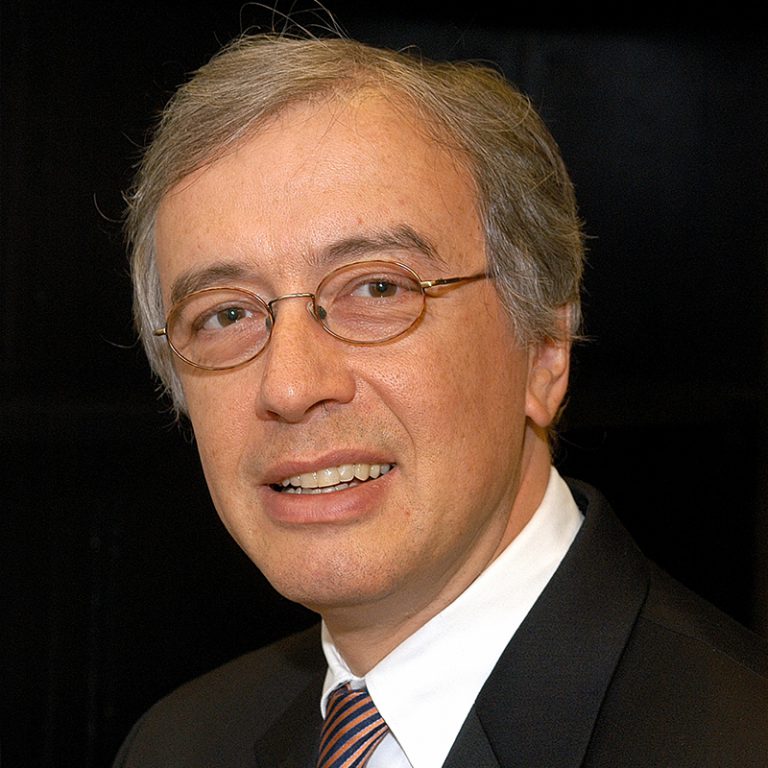

Recent research from Eric Ghysels, Edward M. Bernstein Distinguished Professor of Economics at the University of North Carolina-Chapel Hill, professor of finance at UNC Kenan-Flagler Business School and faculty director of Rethinc. Labs, offers an alternative approach to implementing fairness in machine learning models. Ghysels and his co-authors, Andrii Babii, Xi Chen and Rohit Kumara, approach the problem by attaching explicit costs to a model’s classification errors and distinguishing between different types of errors. More damaging errors are weighted more heavily than less damaging ones; as the algorithm is trained, it internalizes these costs, which reflect the asymmetries its output can create. Put more simply, the algorithm is trained so that its decisions account for context and asymmetries rather than treating every error the same way. This is a very different mindset from the traditional machine learning setup, which uses a one-size-fits-all symmetric cost function that is blind to gender, ethnicity or minority status. It is the mindset of economists, who think in terms of cost-benefit analysis and utility functions.
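To make the idea concrete, here is a minimal sketch of cost-sensitive training. It is not the authors’ deep learning implementation: it assumes a one-feature logistic regression fit by plain gradient descent, with hypothetical cost parameters `c_fn` (the cost of missing a true positive) and `c_fp` (the cost of a false alarm) weighting each example’s contribution to the loss. With equal costs it reduces to ordinary, symmetric logistic regression.

```python
import math


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def weighted_log_loss(p: float, y: int, c_fn: float, c_fp: float) -> float:
    """Cost-weighted cross-entropy for one example.

    A positive (y=1) that receives a low score is penalized in proportion to c_fn;
    a negative (y=0) that receives a high score is penalized in proportion to c_fp.
    """
    eps = 1e-12
    p = min(max(p, eps), 1.0 - eps)
    return -(c_fn * y * math.log(p) + c_fp * (1 - y) * math.log(1.0 - p))


def train(xs, ys, c_fn, c_fp, lr=0.1, epochs=2000):
    """Fit p(y=1 | x) = sigmoid(w*x + b) by gradient descent on the weighted loss."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        grad_w = grad_b = 0.0
        for x, y in zip(xs, ys):
            p = sigmoid(w * x + b)
            # d(loss)/d(logit) is (p - y), scaled by the cost of this example's error type.
            g = (c_fn if y == 1 else c_fp) * (p - y)
            grad_w += g * x
            grad_b += g
        w -= lr * grad_w / n
        b -= lr * grad_b / n
    return w, b


if __name__ == "__main__":
    # Hypothetical toy data: one feature, overlapping classes.
    xs = [-3.5, -2.5, -1.5, -0.5, 0.5, 1.5, 2.5, 3.5]
    ys = [0, 0, 0, 1, 0, 1, 1, 1]
    for c_fn in (1.0, 5.0):
        w, b = train(xs, ys, c_fn=c_fn, c_fp=1.0)
        print(f"c_fn={c_fn}: w={w:.3f}, b={b:.3f}, p(x=0)={sigmoid(b):.3f}")
```

Raising `c_fn` relative to `c_fp` pushes the fitted intercept up, so borderline cases are flagged more readily; the symmetric setting treats both kinds of error alike.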

This may seem abstract, which is why the authors conclude with an application of their model to pretrial detention decisions. In pretrial detention, a judge assesses whether a given defendant should be detained before trial or released on bail. Either decision can incur economic costs: detaining a defendant involves the cost of housing an additional inmate, while releasing a defendant before trial may allow that defendant to flee or commit further offenses. The challenge is that the existing algorithmic model, COMPAS (Correctional Offender Management Profiling for Alternative Sanctions), has been shown to be racially biased: it incorrectly predicts recidivism for black individuals about twice as often as for white individuals. The pretrial detention question is therefore a natural test case for Ghysels’ asymmetric model.
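The trade-off a judge faces can be written as a simple expected-cost rule. The sketch below is a textbook decision-theory illustration, not the COMPAS algorithm or the authors’ model: it assumes a predicted probability `p` that a released defendant would flee or reoffend, and hypothetical costs `c_fp` (detaining someone who would not have reoffended) and `c_fn` (releasing someone who does). Detaining has the lower expected cost whenever `(1 - p) * c_fp < p * c_fn`, that is, whenever `p` exceeds `c_fp / (c_fp + c_fn)`.

```python
def detention_threshold(c_fp: float, c_fn: float) -> float:
    """Probability above which detaining has lower expected cost than releasing."""
    return c_fp / (c_fp + c_fn)


def decide(p: float, c_fp: float, c_fn: float) -> str:
    """Compare expected misclassification costs; ties go to release."""
    expected_cost_detain = (1.0 - p) * c_fp  # cost if the defendant would not have reoffended
    expected_cost_release = p * c_fn         # cost if the defendant does reoffend or flee
    return "detain" if expected_cost_detain < expected_cost_release else "release"


if __name__ == "__main__":
    # Hypothetical costs: symmetric, then a wrongful release 3x as costly.
    for c_fp, c_fn in ((1.0, 1.0), (1.0, 3.0)):
        t = detention_threshold(c_fp, c_fn)
        print(f"c_fp={c_fp}, c_fn={c_fn}: threshold={t:.2f}, p=0.3 -> {decide(0.3, c_fp, c_fn)}")
```

With equal costs the threshold is 0.5; making a wrongful release three times as costly as a wrongful detention lowers it to 0.25, so the same risk score can lead to a different decision.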

Both decisions can also have deeper societal and individual consequences. Several bodies of research have shown that imprisonment takes a psychological toll on the individual and increases the likelihood that the defendant will reoffend. On the other hand, any crimes committed by the defendant while out on bail impose myriad costs on other members of society. Ghysels and his co-authors compare their asymmetric deep learning approach, using costs and benefits of pretrial detention commonly used by the legal profession, with a traditional COMPAS-type machine learning model. They find that their model reduces total cost by 10% to 13%. In effect, their model slightly increases the number of false positive errors (defendants who were kept in jail when they should not have been), but this is more than offset by a reduction in false negative errors (defendants who were released when they should have been kept in jail). In doing so, the asymmetric model also lowers racial bias in pretrial detention.
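The trade-off described above is weighted arithmetic: total cost is the number of each type of error multiplied by its unit cost. The counts and costs below are hypothetical, chosen only to show how a model with more false positives but fewer false negatives can still come out cheaper; they are not the study’s data.

```python
def total_cost(false_positives: int, false_negatives: int, c_fp: float, c_fn: float) -> float:
    """Total misclassification cost: each error count times its unit cost."""
    return false_positives * c_fp + false_negatives * c_fn


def relative_reduction(baseline: float, alternative: float) -> float:
    """Fractional cost saving of `alternative` relative to `baseline`."""
    return (baseline - alternative) / baseline


if __name__ == "__main__":
    # Hypothetical unit costs: a wrongful release costs 3x a wrongful detention.
    c_fp, c_fn = 1.0, 3.0
    symmetric = total_cost(40, 60, c_fp, c_fn)   # 40 + 180 = 220
    asymmetric = total_cost(50, 45, c_fp, c_fn)  # 50 + 135 = 185
    print(f"saving: {relative_reduction(symmetric, asymmetric):.1%}")
```

Here the hypothetical asymmetric model makes ten more false positive errors but fifteen fewer false negative errors, and because the latter are weighted three times as heavily, its total cost is lower.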

Below, we offer some follow-up questions for Ghysels to further discuss the implications of his research.

How should we think of these results in the context of the explosion of large language models such as ChatGPT?

Eric Ghysels: The situation with ChatGPT is more complex. Our research pertains to biases in binary choice problems, or what machine learning researchers call classification problems. Many algorithm-driven decisions, such as fraud detection, college admissions, loan applications and hiring, are binary. ChatGPT is generative AI that provides answers to queries. Some decisions may be made because of the answer provided, but that link is harder to formalize. More importantly, the trustworthiness and accuracy of the answers ChatGPT provides are the foremost concern. There is some commonality between the problems we look at and those just mentioned regarding ChatGPT. In both cases, AI is data-driven, and biases baked into the data will generate output that replicates or even amplifies them. In our classification problem, cost-benefit tradeoffs either neutralize or at least attenuate biases. Filtering the output of ChatGPT is more challenging.

How do you think society and regulators should approach algorithmic and unsupervised decision-making? What sort of role do you envision for regulation or oversight?

Eric Ghysels: Lawmakers and industry leaders are having conversations about regulating AI. It is the classic situation where the industry proclaims it can self-regulate, while some lawmakers advocate a more heavy-handed regulatory approach. Those discussions have many dimensions. To start with, all generative AI models are trained using massive amounts of data, so data privacy issues are already at stake at this stage. For example, Italy invoked European data protection legislation (GDPR) to block ChatGPT. The Italian Data Protection Authority ordered OpenAI to temporarily cease processing Italian users’ data amid a probe into a possible data breach at OpenAI that allowed users to view the titles of other users’ conversations with the chatbot. The agency argued that there was no legal basis for the massive collection and processing of personal data used to train the algorithms on which the platform relies.

Apart from data protection, there are broader societal issues. Geoffrey Hinton, one of the pioneers of the deep learning models widely used in AI, left his role at Google to speak out about the “dangers” of the technology he helped develop. Also, tech industry leaders, including Elon Musk and Steve Wozniak, signed an open letter in late March 2023 calling for a six-month halt on the development of AI systems more powerful than GPT-4, OpenAI’s latest large language model. The open letter calls on AI labs around the world to pause development of large-scale AI systems, citing fears over the “profound risks to society and humanity” they claim this software poses. I am not sure a six-month moratorium provides sufficient time to come up with good solutions, and there is obviously no clear mechanism to incentivize compliance with the letter’s recommendations. Nevertheless, enough voices are clearly speaking loudly about the need to put guardrails in place sooner rather than later.

How would the pretrial detention system benefit from application of your model? What racial justice components can the model address?

Eric Ghysels: That question brings me to a much broader topic. Some judges simply object to using any form of algorithm, even in a subordinate advisory role. So, first and foremost, there is an educational hurdle in making judges, and the broader legal profession, comfortable with decision-making assisted by AI. This issue is of course not limited to the legal profession. As an educator in a business school, I try to teach the insights of AI to future generations of business leaders. My goal is to make them comfortable with making decisions guided by input from AI. Knowing the limitations and advantages of AI, as well as understanding the jargon of data scientists, is important. Once that hurdle is passed, I think the implementation of our models will involve a conversation about the advantages of our setup compared with, say, the COMPAS software.

Are there other areas of society in which this technique could be readily applied?

Eric Ghysels: The short answer is yes. We used pretrial detention because the data is in the public domain. Many other applications, such as loan applications, hiring and college admissions, would involve proprietary data.